\chapter{Monte Carlo Simulation of the Estimation}\label{cap3}

\begin{adjustwidth}{2.5cm}{1cm}

\small This Chapter explains .....

\end{adjustwidth}

\vspace{0.5cm}

In this chapter, four types of parametric estimation methods will be discussed. Moment estimation will be addressed first, followed by maximum likelihood estimation and Fisher minimum $\chi^2$ estimation with equiprobable cells. Lastly, the RP-based minimum $\chi^2$ estimation will be implemented.

\section{Assessment Criteria}

As shown in Table II-XV, in this study we use the RMSE as the comparative standard for assessing estimation accuracy. The RMSE (Root Mean Square Error) is a statistical measure used to assess the accuracy of parameter estimates in predictive models [21]. It quantifies the average discrepancy between predicted and observed values, which is crucial for evaluating goodness-of-fit in regression analysis and time series forecasting [21]. RMSE helps researchers and analysts gauge the precision of parameter estimates, guiding model refinement and selection [3]. It serves as a fundamental tool in various fields, including economics, environmental science, and finance, where precise parameter estimation is essential for decision-making and policy formulation. A small RMSE indicates robust and reliable parameter estimation, which facilitates more accurate predictions and informed decisions [2].

The Root Mean Square Error (RMSE) is calculated using the following formula:

\begin{equation}

RMSE = \sqrt{\frac{1}{n} \sum_{i=1}^{n}(y_i - \hat{y}_i)^2}

\end{equation}

where:\\

1. $n$ is the number of observations.\\

2. $y_i$ is the actual value of the $i$-th observation.\\

3. $\hat{y}_i$ is the estimated value of the $i$-th observation.\\

The formula computes the square root of the average squared difference between the actual and estimated values, providing a measure of the typical deviation of the estimated values from the actual values.
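The RMSE formula above can be sketched in a few lines of Python. This is only an illustration, not the code used in this study; the function names `rmse` and `rmse_of_estimator` are hypothetical. The second helper assumes that, in a simulation, the "actual value" is the true parameter and the "estimated values" are the estimates obtained over repeated samples.

```python
import math


def rmse(actual, estimated):
    """Root mean square error between two equal-length sequences."""
    if len(actual) != len(estimated) or len(actual) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(y - y_hat) ** 2 for y, y_hat in zip(actual, estimated)]
    return math.sqrt(sum(squared) / len(squared))


def rmse_of_estimator(estimates, true_value):
    """RMSE of repeated estimates around a single true parameter value.

    Assumption: every replication targets the same true value.
    """
    return rmse([true_value] * len(estimates), estimates)
```

For instance, `rmse_of_estimator([2.0, 4.0], 3.0)` averages the two squared errors (both equal to 1) and takes the square root.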

\section{Estimation of three parameters}

We first estimated the three parameters in the Pearson Type III distribution using the method of moment estimation and maximum likelihood estimation. Detailed descriptions follow.

\subsection{The method of moment estimation}

First, recalling formulas (1) and (2), the raw moments ($m_k$) and central moments ($t_k$) of a probability distribution can be expressed in terms of the probability density function (PDF) $f(x)$ as follows:\\

1. Raw Moment ($m_k$):\\

\begin{equation}

m_k = \int_{-\infty}^{\infty} x^k f(x) \, dx=E(X^k)

\end{equation}

The raw moment represents the $k$-th power of the variable $x$ weighted by the probability density function $f(x)$ over the entire range of possible values. It provides information about the distribution's location, spread, and shape.\\

2. Central Moment ($t_k$):\\

\begin{equation}

t_k = \int_{-\infty}^{\infty} (x - E(X))^k f(x) \, dx= E[(X-E(X))^k]

\end{equation}

The central moment is similar to the raw moment but is calculated with respect to the mean $E(X)$ of the distribution. It measures the spread or dispersion of the distribution around its mean.

These integrals represent the area under the curve of the PDF weighted by the respective functions of $x$. They are fundamental in statistical analysis for quantifying various characteristics of probability distributions, such as moments, variance, skewness, and kurtosis.\\

Here, we set\\

$\bar{x}$=$E(X)$, $s^2$=$t_2$=$E[(X-E(X))^2]$ and $t_3$=$E[(X-E(X))^3]$\\

Then, recalling equations (1.1), (1.3), (1.4), (1.5), (3.2) and (3.3) and equating sample moments to theoretical moments, we obtain\\

\begin{equation}

E(X)= \int_{\mu}^{\infty} x f(x;\mu, \sigma, \beta) \, dx=\mu + \beta \cdot \sigma=\bar{x}

\end{equation}

\begin{equation}

Var(X)=\int_{\mu}^{\infty} (x - E(X))^2 f(x;\mu, \sigma, \beta) \, dx=\sigma^2 \cdot \beta=s^2

\end{equation}

\begin{equation}

\gamma(X) = \frac{E[(X - E(X))^3]}{SD^3} = \frac{2}{\sqrt{\beta}} = \frac{t_3}{s^3}

\end{equation}\\

where $SD$ represents the standard deviation.\\

According to (3.4), (3.5) and (3.6), the moment estimators $\hat{\mu}$, $\hat{\sigma}$ and $\hat{\beta}$ of $\mu$, $\sigma$ and $\beta$ are\\

\begin{equation}

\hat{\mu}=\bar{x}-s\sqrt{\frac{4s^6}{t_3^2}}

\end{equation}

\begin{equation}

\hat{\sigma}=\frac{s}{\sqrt{\frac{4s^6}{t_3^2}}}

\end{equation}

\begin{equation}

\hat{\beta} = \frac{4s^6}{t_3^2}

\end{equation}\\
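The three estimators can be checked by back-substitution. The following is a short worked derivation from (3.4), (3.5) and (3.6); the numerical values at the end are made up purely for illustration and are not taken from the simulation.

$$
\frac{2}{\sqrt{\beta}}=\frac{t_3}{s^3}
\;\Longrightarrow\;
\sqrt{\hat{\beta}}=\frac{2 s^3}{t_3},
\qquad
\sigma^2 \beta=s^2
\;\Longrightarrow\;
\hat{\sigma}=\frac{s}{\sqrt{\hat{\beta}}},
\qquad
\mu+\beta \sigma=\bar{x}
\;\Longrightarrow\;
\hat{\mu}=\bar{x}-\hat{\beta} \hat{\sigma}=\bar{x}-s \sqrt{\hat{\beta}} .
$$

For example, with the hypothetical sample values $\bar{x}=5$, $s=2$ and $t_3=8$, we get $\hat{\beta}=4 \cdot 2^6 / 8^2=4$, $\hat{\sigma}=2 / 2=1$ and $\hat{\mu}=5-2 \cdot 2=1$, which indeed satisfy $\hat{\mu}+\hat{\beta} \hat{\sigma}=5$, $\hat{\sigma}^2 \hat{\beta}=4=s^2$ and $2 / \sqrt{\hat{\beta}}=1=t_3 / s^3$.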

\subsection{The maximum likelihood estimation method}

According to Equations (1.1) and (2.3), the likelihood function of the Pearson type III distribution is written as follows.

\begin{equation}

\begin{aligned}

& L(\theta)=\prod_{i=1}^n f\left(x_{i}; \mu, \sigma, \beta\right)\\

& = \prod_{i=1}^n \frac{1}{{\sigma^\beta}{\Gamma(\beta)}}(x_i-\mu)^{\beta-1} \cdot e^{-\frac{x_i-\mu}{\sigma}}\\

& = \sigma^{-n \beta} \cdot[\Gamma(\beta)]^{-n} \cdot \prod_{i=1}^n\left(x_i-\mu\right)^{\beta-1} \cdot e^{-\frac{1}{\sigma} \sum_{i=1}^n\left(x_i-\mu\right)}

\end{aligned}

\end{equation}\\

where, $\theta=(\mu, \sigma, \beta)$.\\

Then, taking the natural logarithm of (3.10), we obtain

\begin{equation}

\begin{aligned}

& l(\theta)=\log L(\theta)=-n \beta \log \sigma-n \log \Gamma(\beta)+(\beta-1) \sum_{i=1}^n \log \left(x_i-\mu\right)-\frac{1}{\sigma} \sum_{i=1}^n\left(x_i-\mu\right)

\end{aligned}

\end{equation}\\

Applying the condition in (2.5), the system of equations to be solved is\\

\begin{equation}

\frac{\partial l(\theta)}{\partial \mu}=-(\beta-1) \sum_{i=1}^n \frac{1}{x_i-\mu}+\frac{n}{\sigma}=0

\end{equation}

\begin{equation}

\frac{\partial l(\theta)}{\partial \sigma}=-\frac{n \beta}{\sigma}+\frac{1}{\sigma^2} \sum_{i=1}^n\left(x_i-\mu\right)=0

\end{equation}

\begin{equation}

\frac{\partial l(\theta)}{\partial \beta}=-n \log \sigma-n \psi(\beta)+\sum_{i=1}^n \log \left(x_i-\mu\right)=0

\end{equation}

where, $\psi(\beta)=\frac{\partial \ln \Gamma(\beta)}{\partial \beta}$.\\

According to (3.12), (3.13) and (3.14), closed-form algebraic solutions for the three parameters are not available. In this case, we construct a simulation study in which the estimators $\hat{\mu}$, $\hat{\sigma}$ and $\hat{\beta}$ are computed numerically. The details of the simulation procedure are discussed below.
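One intermediate step may make a numerical solution easier to set up. It is a sketch derived only from the score equations above and is not necessarily the algorithm used in this study. Equation (3.13) can be solved for $\sigma$ in closed form, writing $\bar{x}$ for the sample mean:

$$
\sigma=\frac{1}{n \beta} \sum_{i=1}^n\left(x_i-\mu\right)=\frac{\bar{x}-\mu}{\beta} .
$$

Substituting this into (3.12) and (3.14) leaves two equations in the two unknowns $(\mu, \beta)$, to be solved subject to $\mu<\min _i x_i$:

$$
(\beta-1) \sum_{i=1}^n \frac{1}{x_i-\mu}=\frac{n \beta}{\bar{x}-\mu},
\qquad
\sum_{i=1}^n \log \left(x_i-\mu\right)-n \log \frac{\bar{x}-\mu}{\beta}-n \psi(\beta)=0 .
$$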

\subsection{Procedures of Estimations}

The Monte Carlo simulation process of estimation for the three parameters in the Pearson type III distribution can be divided into the following steps:

\noindent\textbf{Step 1} Determine the true values for $\mu$, $\sigma$ and $\beta$.\\

\textbf{Step 2} Randomly draw a sample of size $n$ from the Pearson type III distribution with parameters $\mu$, $\sigma$ and $\beta$.\\

\textbf{Step 3} For moment estimation, calculate $\hat{\mu}$, $\hat{\sigma}$ and $\hat{\beta}$ by applying formulas (3.7), (3.8) and (3.9). For MLE, a numerical algorithm is needed to solve equations (3.12), (3.13) and (3.14).\\

\textbf{Step 4} Repeat steps 2 and 3 $N$ times. All of the above processes are performed in R 4.3.1.\\

The following table includes the detailed specification for each step:

\begin{table}[h]

\centering

\begin{tabular}{|c|c|}

\hline

Sample sizes \(n\) & 50, 100, 500, and 1000 \\

\hline

Seven different assignments for \(\mu\), \(\sigma\), and \(\beta\) & (3,1,1), (3,1,2), (3,1,3), \\

& (3,2,1), (3,3,1), (2,1,1), and (1,1,1) \\

\hline

Iterations & \(N = 1000\) \\

\hline

\end{tabular}

\end{table}
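Steps 1 to 4 can be sketched for the moment estimators as follows. This is an illustrative Python sketch, not the R code used in the study. The function names are hypothetical, the sample variance and third central moment are assumed to use the divisor $n$, and averaging the $N$ estimates to obtain the tabulated values is likewise an assumption. Samples are drawn as $X=\mu+G$ with $G$ gamma-distributed with shape $\beta$ and scale $\sigma$, which matches the density in (3.10).

```python
import math
import random


def draw_pearson3(rng, n, mu, sigma, beta):
    """Step 2: draw n values of X = mu + G, G ~ Gamma(shape=beta, scale=sigma)."""
    return [mu + rng.gammavariate(beta, sigma) for _ in range(n)]


def moment_estimates(sample):
    """Step 3: moment estimators (mu_hat, sigma_hat, beta_hat) from (3.7)-(3.9)."""
    n = len(sample)
    mean = sum(sample) / n
    s2 = sum((x - mean) ** 2 for x in sample) / n  # assumption: divisor n
    t3 = sum((x - mean) ** 3 for x in sample) / n  # third central moment
    beta_hat = 4.0 * s2 ** 3 / t3 ** 2
    s = math.sqrt(s2)
    root_beta = math.sqrt(beta_hat)
    return mean - s * root_beta, s / root_beta, beta_hat


def simulate(mu, sigma, beta, n, n_rep, seed=0):
    """Steps 1-4: mean estimate and RMSE of each parameter over n_rep samples."""
    rng = random.Random(seed)  # fixed seed so that runs are reproducible
    truth = (mu, sigma, beta)
    total = [0.0, 0.0, 0.0]
    sq_err = [0.0, 0.0, 0.0]
    for _ in range(n_rep):
        est = moment_estimates(draw_pearson3(rng, n, mu, sigma, beta))
        for k in range(3):
            total[k] += est[k]
            sq_err[k] += (est[k] - truth[k]) ** 2
    means = [t / n_rep for t in total]
    rmses = [math.sqrt(q / n_rep) for q in sq_err]
    return means, rmses


if __name__ == "__main__":
    means, rmses = simulate(3.0, 1.0, 1.0, n=100, n_rep=1000)
    print("mean estimates (mu, sigma, beta):", means)
    print("RMSE (mu, sigma, beta):", rmses)
```

Because the random number generator differs from the one in R, the printed values will not reproduce the tables exactly. The MLE branch of Step 3 would replace `moment_estimates` with a numerical solver.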

\subsection{Estimation Results of Simulation}

Tables $3.1-3.7$ present the results of moment estimation, while Tables $3.8-3.14$ present the results of maximum likelihood estimation. In the next subsection, we conduct a detailed comparative analysis of these results.\\

\begin{table}[!htbp]

\caption{Moment Estimation and RMSE for $\mu$=3, $\sigma$=1, $\beta$=1}

\centering

\begin{tabular}{|c|c|c|c|c|}

\hline

$n$ & 50 & 100 & 500 & 1000 \\

\hline

$\hat{\mu}$ & 2.6429 & 2.7982 & 2.9539 & 2.976 \\

$\hat{\sigma}$ & 0.8136 & 0.8876 & 0.9759 & 0.9885 \\

$\hat{\beta}$ & 2.3482 & 1.6643 & 1.1393 & 1.0722 \\

RMSE($\hat{\mu}$) & 0.524 & 0.3342 & 0.1411 & 0.1025 \\

RMSE($\hat{\sigma}$) & 0.4584 & 0.3783 & 0.2059 & 0.1498 \\

RMSE($\hat{\beta}$) & 2.5026 & 1.193 & 0.3803 & 0.256 \\

\hline

\end{tabular}

\end{table}

\begin{table}[!htbp]

\caption{Moment Estimation and RMSE for $\mu$=3, $\sigma$=1, $\beta$=2}

\centering

\begin{tabular}{|c|c|c|c|c|}

\hline

$n$ & 50 & 100 & 500 & 1000 \\

\hline

$\hat{\mu}$ & 2.1958 & 2.5977 & 2.907 & 2.9524 \\

$\hat{\sigma}$ & 0.862 & 0.917 & 0.9809 & 0.9918 \\

$\hat{\beta}$ & 6.0924 & 3.3176 & 2.2813 & 2.1342 \\

RMSE($\hat{\mu}$) & 1.6038 & 0.764 & 0.3152 & 0.2157 \\

RMSE($\hat{\sigma}$) & 0.4727 & 0.3795 & 0.2099 & 0.1445 \\

RMSE($\hat{\beta}$) & 20.3582 & 2.7083 & 0.8195 & 0.5199 \\

\hline

\end{tabular}

\end{table}

\begin{table}[!htbp]

\caption{Moment Estimation and RMSE for $\mu$=3, $\sigma$=1, $\beta$=3}

\centering

\begin{tabular}{|c|c|c|c|c|}

\hline

$n$ & 50 & 100 & 500 & 1000 \\

\hline

$\hat{\mu}$ & 1.1751 & 2.3175 & 2.853 & 2.9107 \\

$\hat{\sigma}$ & 0.8698 & 0.9073 & 0.9781 & 0.9855 \\

$\hat{\beta}$ & 49.9423 & 5.3878 & 3.4273 & 3.2425 \\

RMSE($\hat{\mu}$) & 9.5964 & 1.3937 & 0.4825 & 0.3379 \\

RMSE($\hat{\sigma}$) & 0.4831 & 0.3695 & 0.1977 & 0.1433 \\

RMSE($\hat{\beta}$) & 889.324 & 5.7321 & 1.2264 & 0.8147 \\

\hline

\end{tabular}

\end{table}

\begin{table}[!htbp]

\caption{Moment Estimation and RMSE for $\mu$=3, $\sigma$=2, $\beta$=1}

\centering

\begin{tabular}{|c|c|c|c|c|}

\hline

$n$ & 50 & 100 & 500 & 1000 \\

\hline

$\hat{\mu}$ & 2.2727 & 2.6162 & 2.9056 & 2.9494 \\

$\hat{\sigma}$ & 1.6357 & 1.8062 & 1.9616 & 1.9775 \\

$\hat{\beta}$ & 2.4406 & 1.6247 & 1.1405 & 1.0732 \\

RMSE($\hat{\mu}$) & 1.1067 & 0.6524 & 0.2891 & 0.2104 \\

RMSE($\hat{\sigma}$) & 0.9879 & 0.7912 & 0.4399 & 0.3088 \\

RMSE($\hat{\beta}$) & 3.3107 & 1.1244 & 0.3810 & 0.2601 \\

\hline

\end{tabular}

\end{table}

\begin{table}[!htbp]

\caption{Moment Estimation and RMSE for $\mu$=3, $\sigma$=3, $\beta$=1}

\centering

\begin{tabular}{|c|c|c|c|c|}

\hline

$n$ & 50 & 100 & 500 & 1000 \\

\hline

$\hat{\mu}$ & 1.9091 & 2.4243 & 2.8584 & 2.924 \\

$\hat{\sigma}$ & 2.4536 & 2.7092 & 2.9424 & 2.9662 \\

$\hat{\beta}$ & 2.4406 & 1.6247 & 1.1405 & 1.0732 \\

RMSE($\hat{\mu}$) & 1.6601 & 0.9786 & 0.4337 & 0.3156 \\

RMSE($\hat{\sigma}$) & 1.4818 & 1.1868 & 0.6599 & 0.4632 \\

RMSE($\hat{\beta}$) & 3.3107 & 1.1244 & 0.3810 & 0.2601 \\

\hline

\end{tabular}

\end{table}

\begin{table}[!htbp]

\caption{Moment Estimation and RMSE for $\mu$=2, $\sigma$=1, $\beta$=1}

\centering

\begin{tabular}{|c|c|c|c|c|}

\hline

$n$ & 50 & 100 & 500 & 1000 \\

\hline

$\hat{\mu}$ & 1.6429 & 1.7982 & 1.9539 & 1.976 \\

$\hat{\sigma}$ & 0.8136 & 0.8876 & 0.9759 & 0.9885 \\

$\hat{\beta}$ & 2.3482 & 1.6643 & 1.1393 & 1.0722 \\

RMSE($\hat{\mu}$) & 0.524 & 0.3342 & 0.1411 & 0.1025 \\

RMSE($\hat{\sigma}$) & 0.4584 & 0.3783 & 0.2059 & 0.1498 \\

RMSE($\hat{\beta}$) & 2.5026 & 1.193 & 0.3803 & 0.256 \\

\hline

\end{tabular}

\end{table}

\begin{table}[!htbp]

\caption{Moment Estimation and RMSE for $\mu$=1, $\sigma$=1, $\beta$=1}

\centering

\begin{tabular}{|c|c|c|c|c|}

\hline

$n$ & 50 & 100 & 500 & 1000 \\

\hline

$\hat{\mu}$ & 0.6429 & 0.7982 & 0.9539 & 0.976 \\

$\hat{\sigma}$ & 0.8136 & 0.8876 & 0.9759 & 0.9885 \\

$\hat{\beta}$ & 2.3482 & 1.6643 & 1.1393 & 1.0722 \\

RMSE($\hat{\mu}$) & 0.524 & 0.3342 & 0.1411 & 0.1025 \\

RMSE($\hat{\sigma}$) & 0.4584 & 0.3783 & 0.2059 & 0.1498 \\

RMSE($\hat{\beta}$) & 2.5026 & 1.193 & 0.3803 & 0.256 \\

\hline

\end{tabular}

\end{table}

\begin{table}[!htbp]

\caption{MLE and RMSE for $\mu=3, \sigma=1, \beta=1$}

\centering

\begin{tabular}{|c|c|c|c|c|}

\hline

$n$ & 50 & 100 & 500 & 1000 \\

\hline

$\hat{\mu}$ & 2.9971 & 3.0004 & 3.0001 & 3.0000 \\

$\hat{\sigma}$ & 1.0018 & 1.0363 & 1.0504 & 1.0640 \\

$\hat{\beta}$ & 1.0889 & 1.0549 & 1.0600 & 1.0694 \\

RMSE($\hat{\mu}$) & 0.0192 & 0.0063 & 0.0010 & 0.0005 \\

RMSE($\hat{\sigma}$) & 0.1344 & 0.0855 & 0.0851 & 0.0933 \\

RMSE($\hat{\beta}$) & 0.1740 & 0.0894 & 0.0852 & 0.0936 \\

\hline

\end{tabular}

\end{table}

\begin{table}[!htbp]

\caption{MLE and RMSE for $\mu=3, \sigma=1, \beta=2$}

\centering

\begin{tabular}{|c|c|c|c|c|}

\hline

$n$ & 50 & 100 & 500 & 1000 \\

\hline

$\hat{\mu}$ & 3.1030 & 3.0461 & 3.0008 & 2.9988 \\

$\hat{\sigma}$ & 1.0354 & 1.0108 & 1.0034 & 1.0022 \\

$\hat{\beta}$ & 1.8206 & 1.9197 & 1.9822 & 1.9888 \\

RMSE($\hat{\mu}$) & 0.1500 & 0.0868 & 0.0242 & 0.0145 \\

RMSE($\hat{\sigma}$) & 0.1342 & 0.0834 & 0.0468 & 0.0345 \\

RMSE($\hat{\beta}$) & 0.2594 & 0.1818 & 0.1066 & 0.0744 \\

\hline

\end{tabular}

\end{table}

\begin{table}[!htbp]

\caption{MLE and RMSE for $\mu=3, \sigma=1, \beta=3$}

\centering

\begin{tabular}{|c|c|c|c|c|}

\hline

$n$ & 50 & 100 & 500 & 1000 \\

\hline

$\hat{\mu}$ & 3.4092 & 3.2893 & 3.1199 & 3.0734 \\

$\hat{\sigma}$ & 1.4339 & 1.3088 & 1.1104 & 1.0622 \\

$\hat{\beta}$ & 1.8390 & 2.1037 & 2.6070 & 2.7614 \\

RMSE($\hat{\mu}$) & 0.4589 & 0.3289 & 0.1454 & 0.0960 \\

RMSE($\hat{\sigma}$) & 0.4822 & 0.3451 & 0.1376 & 0.0875 \\

RMSE($\hat{\beta}$) & 1.1776 & 0.9268 & 0.4519 & 0.3024 \\

\hline

\end{tabular}

\end{table}

\begin{table}[!htbp]

\caption{MLE and RMSE for $\mu=3, \sigma=2, \beta=1$}

\centering

\begin{tabular}{|c|c|c|c|c|}

\hline

$n$ & 50 & 100 & 500 & 1000 \\

\hline

$\hat{\mu}$ & 2.9945 & 2.9978 & 3.0006 & 3.0003 \\

$\hat{\sigma}$ & 1.8414 & 1.9692 & 2.0369 & 2.0413 \\

$\hat{\beta}$ & 1.1178 & 1.0602 & 1.0459 & 1.0465 \\

\text{RMSE}($\hat{\mu}$) & 0.034 & 0.0152 & 0.0022 & 0.001 \\

\text{RMSE}($\hat{\sigma}$) & 0.3739 & 0.2011 & 0.0768 & 0.0701 \\

\text{RMSE}($\hat{\beta}$) & 0.2121 & 0.1248 & 0.0651 & 0.0659 \\

\hline

\end{tabular}

\end{table}

\begin{table}[!htbp]

\caption{MLE and RMSE for $\mu=3, \sigma=3, \beta=1$}

\centering

\begin{tabular}{|c|c|c|c|c|}

\hline

$n$ & 50 & 100 & 500 & 1000 \\

\hline

$\hat{\mu}$ & 3.0032 & 2.9939 & 3.0006 & 3.0007 \\

$\hat{\sigma}$ & 2.6142 & 2.8153 & 3.0089 & 3.0307 \\

$\hat{\beta}$ & 1.1252 & 1.0795 & 1.0382 & 1.0374 \\

\text{RMSE}($\hat{\mu}$) & 0.0552 & 0.0246 & 0.0078 & 0.0019 \\

\text{RMSE}($\hat{\sigma}$) & 0.6651 & 0.4537 & 0.1328 & 0.062 \\

\text{RMSE}($\hat{\beta}$) & 0.2025 & 0.1591 & 0.0668 & 0.0534 \\

\hline

\end{tabular}

\end{table}

\begin{table}[!htbp]

\caption{MLE and RMSE for $\mu=2, \sigma=1, \beta=1$}

\centering

\begin{tabular}{|c|c|c|c|c|}

\hline

$n$ & 50 & 100 & 500 & 1000 \\

\hline

$\hat{\mu}$ & 1.9970 & 2.0002 & 2.0001 & 2.0000 \\

$\hat{\sigma}$ & 1.0015 & 1.0358 & 1.0505 & 1.0639 \\

$\hat{\beta}$ & 1.0893 & 1.0561 & 1.0600 & 1.0694 \\

RMSE($\hat{\mu}$) & 0.0194 & 0.0074 & 0.0010 & 0.0004 \\

RMSE($\hat{\sigma}$) & 0.1348 & 0.0869 & 0.0851 & 0.0932 \\

RMSE($\hat{\beta}$) & 0.1748 & 0.0947 & 0.0853 & 0.0935 \\

\hline

\end{tabular}

\end{table}

\begin{table}[!htbp]

\caption{MLE and RMSE for $\mu=1, \sigma=1, \beta=1$}

\centering

\begin{tabular}{|c|c|c|c|c|}

\hline

$n$ & 50 & 100 & 500 & 1000 \\

\hline

$\hat{\mu}$ & 0.9970 & 1.0003 & 1.0001 & 1.0000 \\

$\hat{\sigma}$ & 1.0007 & 1.0364 & 1.0504 & 1.0639 \\

$\hat{\beta}$ & 1.0892 & 1.0554 & 1.0600 & 1.0694 \\

RMSE($\hat{\mu}$) & 0.0193 & 0.0069 & 0.0010 & 0.0005 \\

RMSE($\hat{\sigma}$) & 0.1349 & 0.0856 & 0.0850 & 0.0931 \\

RMSE($\hat{\beta}$) & 0.1738 & 0.0913 & 0.0853 & 0.0935 \\

\hline

\end{tabular}

\end{table}

\subsection{Comparative Analysis}

The above data tables provide a detailed presentation of the results from multiple simulations, including estimates $\hat{\mu}$, $\hat{\sigma}$, and $\hat{\beta}$ under different sample sizes n, along with their corresponding RMSE values. The following is a summary and analysis of the results.

\subsubsection{Influence of Sample Size}

From the tables, it can be observed that as the sample size increases, the RMSE of each estimate generally decreases, indicating an improvement in the accuracy of the estimates with increasing sample size. At the same time, the estimates \(\hat{\mu}\), \(\hat{\sigma}\), and \(\hat{\beta}\) also stabilize gradually as the sample size increases.

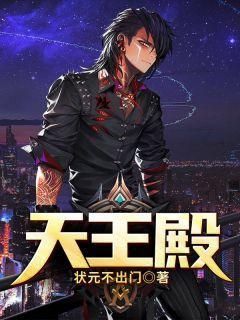

\begin{figure}[ht!] %!t

\centering

\includegraphics[width=3.5in]{setting1.png}

\caption{Trends of the parameter estimates and their RMSE with sample size for MOM and MLE in setting 1: $\mu$ = 3, $\sigma$ = 1, and $\beta$ = 1}

\label{LP}

\end{figure}

\subsubsection{MOM vs MLE}

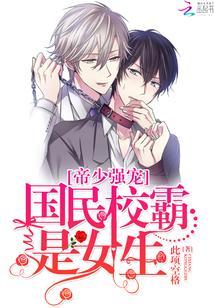

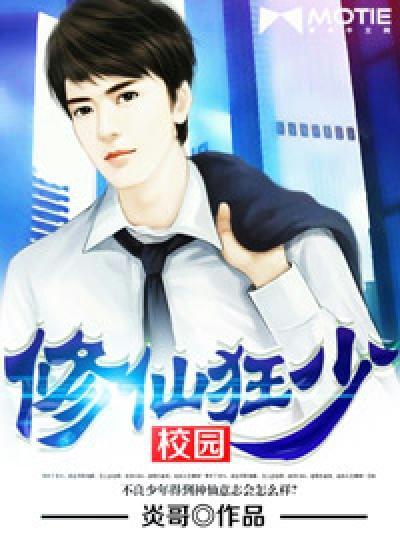

Additionally, the two methods differ for a given sample size and parameter setting. Especially for small sample sizes ($n=50, 100$), moment estimates tend to be more unstable than maximum likelihood estimates. As the sample size increases, the stability of both moment estimation and maximum likelihood estimation improves markedly, but the difference between them persists. Figures 3.1 to 3.3 illustrate the results for setting 1, setting 2, and setting 4, respectively. The figures show that, across all sample sizes, the RMSE values corresponding to MLE are smaller, indicating that MLE has better accuracy compared to MOM. \\

\begin{figure}[ht!] %!t

\centering

\includegraphics[width=3.5in]{setting2.png}

\caption{Trends of the parameter estimates and their RMSE with sample size for MOM and MLE in setting 2: $\mu$ = 3, $\sigma$ = 1, and $\beta$ = 2}

\label{LP}

\end{figure}

\begin{figure}[ht!] %!t

\centering

\includegraphics[width=3.5in]{setting4.png}

\caption{Trends of the parameter estimates and their RMSE with sample size for MOM and MLE in setting 4: $\mu$ = 3, $\sigma$ = 2, and $\beta$ = 1}

\label{LP}

\end{figure}

\section{Estimation of two parameters}

In this section, we demonstrate the detailed process of two-parameter estimation. That is, we first assign a value to the shape parameter $\beta$, and then use the four methods introduced in the methodology in turn to estimate the location parameter $\mu$ and the scale parameter $\sigma$.

\subsection{The method of moment estimation}

Here, we set $\beta=1$, then (1.1) can be written as

\begin{equation}

f(x ; \mu, \sigma)=\frac{1}{\sigma} e^{-\frac{x-\mu}{\sigma}} \quad, \quad x>\mu

\end{equation}

In this case, (3.4) and (3.5) are replaced with

\begin{equation}

E(X)=\int_\mu^{\infty} x \cdot \frac{1}{\sigma} e^{-\frac{x-\mu}{\sigma}} d x=\mu+\sigma=\bar{x}

\end{equation}

\begin{equation}

Var(X)=\sigma^2=s^2

\end{equation}

Then, as in (3.7) and (3.8), the moment estimates of $\mu$ and $\sigma$ are $\hat{\mu}=\bar{x}-s$ and $\hat{\sigma}=s$, respectively.

\subsection{The maximum likelihood estimation method}

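In this case, since $f\left(x_i; \mu, \sigma\right)$ is a monotonically increasing function of $\mu$ on $\mu \leq x_i$ for every $i$, the likelihood is maximized at the largest admissible value of $\mu$, so the MLE of $\mu$ is

\begin{equation}

\hat{\mu}=\min_{1 \leq i \leq n} x_i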

\end{equation}

Then, recalling (3.10) and (3.11), we obtain the likelihood function and log-likelihood function in this case:

\begin{equation}

L(x ; \mu, \sigma)=\frac{1}{\sigma^n} \cdot e^{-\frac{1}{\sigma} \sum_{i=1}^n\left(x_i-\mu\right)}

\end{equation}

\begin{equation}

l(x ; \mu, \sigma)=-n \ln (\sigma)-\frac{1}{\sigma} \sum_{i=1}^n\left(x_i-\mu\right)

\end{equation}

Similarly, (3.13) can be written as

\begin{equation}

\frac{\partial l}{\partial \sigma}=-\frac{n}{\sigma}+\frac{1}{\sigma^2} \sum_{i=1}^n\left(x_i-\mu\right)=0

\end{equation}

By (3.18) and (3.21), the MLE of $\sigma$ is

\begin{equation}

\hat{\sigma}=\frac{\sum_{i=1}^n\left(x_i-\hat{\mu}\right)}{n}=\bar{x}-\hat{\mu}

\end{equation}
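Both pairs of closed-form estimators for the case $\beta=1$ are easy to compute directly. The following Python sketch is illustrative only; the function names are hypothetical, and the moment version assumes that $s$ is the standard deviation with divisor $n$.

```python
import statistics


def moment_estimates_beta1(sample):
    """Moment estimates for beta = 1: mu_hat = mean - s, sigma_hat = s."""
    mean = statistics.fmean(sample)
    s = statistics.pstdev(sample)  # assumption: divisor n, not n - 1
    return mean - s, s


def mle_beta1(sample):
    """MLE for beta = 1: mu_hat = min(x_i), sigma_hat = mean - mu_hat."""
    mu_hat = min(sample)
    sigma_hat = statistics.fmean(sample) - mu_hat
    return mu_hat, sigma_hat
```

Note that the MLE of $\mu$ can never fall below the true $\mu$, because every observation satisfies $x_i>\mu$.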

\subsection{Fisher Minimum $\chi^2$ Estimation with Equiprobable Cells}

The Fisher minimum chi-square estimation method is based on minimizing the Pearson-Fisher chi-square statistic

$$

\chi_n^2(\theta)=\sum_{i=1}^m \frac{\left(N_i-n p_i(\theta)\right)^2}{n p_i(\theta)} .

$$
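For given cell counts $N_i$ and cell probabilities $p_i(\theta)$, the statistic above is a simple sum. The following Python sketch evaluates it; the function name `pearson_chi_square` is hypothetical, and the sketch covers only the evaluation of the statistic, not its minimization over $\theta$.

```python
def pearson_chi_square(counts, probs):
    """Pearson-Fisher statistic: sum over cells of (N_i - n p_i)^2 / (n p_i)."""
    if len(counts) != len(probs):
        raise ValueError("counts and probs must have the same length")
    n = sum(counts)  # total sample size
    return sum((c - n * p) ** 2 / (n * p) for c, p in zip(counts, probs))
```

With three equiprobable cells and counts 10, 20 and 30, the expected count is 20 per cell and the statistic is $(100+0+100)/20=10$.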

With $\beta=1$, $\mu=0$ and $\sigma=1$, the PDF of the Pearson type III distribution is given by

\begin{equation}

f(x ; 0, 1)=e^{-x} \quad, \quad x>0

\end{equation}

The CDF of this distribution is

\begin{equation}

F(x;0,1) = 1-e^{-x}

\end{equation}
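As an illustration of how this CDF yields equiprobable cells: the interior cut points $c_1<\cdots<c_{m-1}$ that split the support into $m$ cells of probability $1/m$ solve $F(c_i ; 0,1)=i/m$, which gives $c_i=-\ln (1-i/m)$. The Python sketch below assumes exactly this CDF; the names `equiprobable_cut_points` and `cell_probabilities` are hypothetical.

```python
import math


def equiprobable_cut_points(m):
    """Interior cut points c_i with F(c_i) = i/m for F(x) = 1 - exp(-x)."""
    if m < 2:
        raise ValueError("at least two cells are required")
    return [-math.log(1.0 - i / m) for i in range(1, m)]


def cell_probabilities(cuts):
    """Probabilities of the cells (0, c_1), (c_1, c_2), ..., (c_{m-1}, inf)."""
    cdf = [0.0] + [1.0 - math.exp(-c) for c in cuts] + [1.0]
    return [upper - lower for lower, upper in zip(cdf, cdf[1:])]
```

Cut points for non-standard $\mu$ and $\sigma$ would follow by the linear transformation $\mu+\sigma c_i$, since the distribution is a location-scale family.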

Let $\Delta_1, \Delta_2, \ldots, \Delta_{m-1}$ be the equiprobable points of the standard Gumbel distribution, which split the real line into $m$ cells.

Then, the classification is given by

$$
p_1(0,1)=\int_{-\infty}^{\Delta_1} f(x ; 0,1) \, dx=p_2(0,1)=\int_{\Delta_1}^{\Delta_2} f(x ; 0,1) \, dx=\cdots=\frac{1}{m}
$$

For the Gumbel distribution,

$$
p_i(0,1)=\left\{\begin{array}{lc}
\int_{-\infty}^{\Delta_1} f(x ; 0,1) \, dx=e^{-e^{-\Delta_1}}, & \text { for } i=1 \\
\int_{\Delta_{i-1}}^{\Delta_i} f(x ; 0,1) \, dx=e^{-e^{-\Delta_i}}-e^{-e^{-\Delta_{i-1}}}, & \text { for } 1<i<m \\
\int_{\Delta_{m-1}}^{\infty} f(x ; 0,1) \, dx=1-e^{-e^{-\Delta_{m-1}}}, & \text { for } i=m
\end{array}\right.
$$

The points $\Delta_i$ are obtained by setting $p_i(0,1)=\frac{1}{m}$, and the cells are defined as

$$
J_1=\left(-\infty, \hat{\mu}+\hat{\sigma} \Delta_1\right), \quad \ldots, \quad J_m=\left(\hat{\mu}+\hat{\sigma} \Delta_{m-1},+\infty\right),
$$

where $\hat{\mu}$ and $\hat{\sigma}$ are the MLEs of the parameters. Let

$$
N_i=\operatorname{Card}\left\{X_j \in J_i: j=1, \ldots, n\right\}, \quad i=1, \ldots, m
$$

Define the cell probabilities:

$$
p_i(\mu, \sigma)=\int_{J_i} f(x ; \mu, \sigma) \, dx, \quad i=1, \ldots, m
$$

For the Gumbel distribution, the Pearson-Fisher minimum chi-square estimator satisfies

$$
\sum_{i=1}^m \frac{N_i}{p_i(\theta)} \cdot \frac{\partial p_i(\theta)}{\partial \theta_j}=0, \quad j=1,2, \quad \theta=(\mu, \sigma)
$$

\subsection{RP-based Minimum $\chi^2$ Estimation}

The cells used to define the chi-square equations for RP-minimum chi-square estimation differ from those of Fisher minimum chi-square estimation. The cells in Fisher minimum chi-square estimation are defined by a set of equiprobable points, whereas the cells in RP-minimum chi-square estimation are defined by the representative points (RPs). Finding representative points is based on the idea of finding a discrete distribution that approximates a continuous distribution. Once a loss function is introduced, the optimal RPs are the points that minimize it and therefore have the best representative performance. Let $\left\{R_i^0: i=1, \ldots, m\right\}$ be a set of RPs obtained by an existing algorithm from the standard Gumbel distribution.
Then a set of RPs from $f(x ; \mu, \sigma)$, the Gumbel distribution with non-standard parameters, can be estimated by

\begin{equation}
R_i=\hat{\mu}+\hat{\sigma} R_i^0, \quad i=1, \ldots, m
\end{equation}

where $\hat{\mu}$ and $\hat{\sigma}$ are the MLEs of the true parameters $\mu$ and $\sigma$, respectively. Define the cells:

\begin{equation}
\begin{gathered}
I_1=\left(-\infty, \frac{R_1+R_2}{2}\right), \quad I_j=\left(\frac{R_{j-1}+R_j}{2}, \frac{R_j+R_{j+1}}{2}\right), \quad I_m=\left(\frac{R_{m-1}+R_m}{2},+\infty\right), \\
j=2, \ldots, m-1 .
\end{gathered}
\end{equation}

The RP minimum chi-square estimators are the solution to equation (2.6). The algorithm of RP-minimum chi-square estimation is similar to that of Fisher minimum chi-square estimation.

\subsection{Procedures of Estimations}

\subsubsection{Moment and Maximum Likelihood Estimations}

The moment estimators, derived as previously described, are given by (2.8) and (2.9). As these results are algebraic solutions, constructing the procedure is straightforward, as outlined below:

\begin{enumerate}
\item \textbf{Step 1:} Determine the true values for $v$ ($v > 2$), $\mu$, and $\sigma$.
\item \textbf{Step 2:} Randomly select $n$ samples following the generalized Student's $t$-distribution with parameters $v$ ($v > 2$), $\mu$, and $\sigma$.
\item \textbf{Step 3:} Calculate $\hat{\mu}$ and $\hat{\sigma}$ using the formulas in equations (2.8) and (2.9), respectively.
\item \textbf{Step 4:} Repeat steps 2 and 3 for $N$ iterations, and compute the Root Mean Square Error (RMSE) using the formula in equation (2.24).
\end{enumerate}

The maximum likelihood estimators follow the formulas (2.17) and (2.18), derived as before. While $\hat{\mu}$ can be expressed algebraically, $\hat{\sigma}$ cannot. Therefore, the procedure differs slightly from that of moment estimation.

\begin{enumerate}
\item \textbf{Step 1:} Determine the true values for $v$ ($v > 2$), $\mu$, and $\sigma$.
\item \textbf{Step 2:} Randomly select $n$ samples following the generalized Student's $t$-distribution with parameters $v$ ($v > 2$), $\mu$, and $\sigma$.
\item \textbf{Step 3:} Calculate $\hat{\mu}$ using the formula in equation (2.17). Use the Newton-Raphson method to numerically solve equation (2.18) for $\hat{\sigma}$ (the ``fsolve'' built-in function in MATLAB could be applied).
\item \textbf{Step 4:} Repeat steps 2 and 3 for $N$ iterations, and compute the RMSE using the formula in equation (2.24).
\end{enumerate}

\subsubsection{Fisher Minimum $\chi^2$ Estimations}

For the two minimum $\chi^2$ estimations, the procedures differ from the previous ones. Initially, we must determine the true values and the maximum likelihood estimators of the parameters, which is no different from steps 1 to 3 of the maximum likelihood estimation procedure. Next, it is crucial to generate $m$ cells of interest over the support of the generalized Student's $t$-distribution, i.e., all real numbers. Since the generalized Student's $t$-distribution is a location-scale family extending the standard Student's $t$-distribution, we can first generate $m$ cells of interest with respect to the standard distribution, and then linearly transform them using the maximum likelihood estimators of the parameters. This transformation makes the transformed cells approximately the ideal cells of interest \textit{w.r.t.} the generalized distribution. Following this, (2.23) is set up and solved with the MATLAB built-in function ``fsolve''. The solutions are called the minimum $\chi^2$ estimators. After repeating the above steps $N$ times, the RMSE is calculated by applying (2.24). The detailed steps are listed as follows.

\begin{enumerate}
\item Step 1: Determine the true values for $v$ ($v > 2$), $\mu$, and $\sigma$.
\item Step 2: Generate $m$ cells with respect to the standard Student's $t$-distribution, denoted by $J_i$, where $i = 1, \ldots, m$.
\item Step 3: Randomly take $n$ samples following the generalized Student's $t$-distribution with parameters $v$ ($v > 2$), $\mu$, and $\sigma$.
\item Step 4: Calculate $\hat{\mu}$ by applying the formula in (2.17). Use the Newton-Raphson method to solve equation (2.18) numerically for $\hat{\sigma}$ (the ``fsolve'' built-in function in MATLAB could be applied).
\item Step 5: Transform all the $J_i$ linearly by $\Delta_i = \hat{\mu} + \hat{\sigma} J_i$, that is, transform the finite endpoints of each $J_i$ linearly and leave infinite endpoints unchanged.
\item Step 6: Determine all of the elements specified in (2.23), and solve the equation numerically by applying the ``fsolve'' built-in function in MATLAB.
\item Step 7: Repeat steps 3 to 6 $N$ times, and calculate the RMSE by applying the formula in (2.24).
\end{enumerate}

In step 2, we have two ways of generating cells from the standard Student's $t$-distribution with $v$ ($v > 2$) degrees of freedom. The first way is Fisher's classification, i.e., we let $p_1(\theta) = \ldots = p_m(\theta) = \frac{1}{m}$, where $p_i(\theta)$ is defined as in (2.20). The endpoints are obtained by solving these equations, and the cell intervals are constructed from them. The second way is the RP classification. From [6], we can obtain the RPs of the standard Student's $t$-distribution with $v$ ($v > 2$) degrees of freedom, which are denoted as $R_1, \ldots, R_m$. The cell intervals $\Delta_1 = (-\infty, \frac{R_1 + R_2}{2})$, $\Delta_j = (\frac{R_{j-1} + R_j}{2}, \frac{R_j + R_{j+1}}{2})$, where $j = 2, \ldots, m - 1$, and $\Delta_m = (\frac{R_{m-1} + R_m}{2}, \infty)$ are then constructed.

\subsection{Estimation Results of Simulation}

The estimation results are listed in the following tables. In our simulation study, we set $n = 50, 100, 200, 400$, $m = 5, 10, 20$, $\beta = 1$, $\mu = 1$, $\sigma = 2$, and $N = 1000$.
It is worth noticing that the true values of the parameters are selected without loss of generality. The aim of our simulation study is to compare the estimation methods and choose the best ones. Hence, the true values of the parameters should be kept the same across methods.\\

\begin{table}[!htbp]
\caption{Simulation Results of Moment Estimation}
\centering
\begin{tabular}{|c|c|c|c|c|}
\hline
$n$ & 50 & 100 & 200 & 400 \\
\hline
$\hat{\mu}$ & 0.9080 & 0.9732 & 0.9904 & 0.9932 \\
$\hat{\sigma}$ & 1.9603 & 1.9893 & 1.9963 & 1.9970 \\
RMSE($\hat{\mu}$) & 0.2823 & 0.1561 & 0.0909 & 0.0755 \\
RMSE($\hat{\sigma}$) & 0.4373 & 0.2329 & 0.1302 & 0.1065 \\
\hline
\end{tabular}
\end{table}

\begin{table}[!htbp]
\caption{Simulation Results of MLE}
\centering
\begin{tabular}{|c|c|c|c|c|}
\hline
$n$ & 50 & 100 & 200 & 400 \\
\hline
$\hat{\mu}$ & 1.1744 & 1.0946 & 1.0463 & 1.0201 \\
$\hat{\sigma}$ & 2.2571 & 2.1283 & 2.0585 & 2.0215 \\
RMSE($\hat{\mu}$) & 0.2324 & 0.1399 & 0.0845 & 0.0529 \\
RMSE($\hat{\sigma}$) & 0.3754 & 0.2350 & 0.1528 & 0.0931 \\
\hline
\end{tabular}
\end{table}

\begin{table}[htbp]
\caption{Simulation Results of Minimum $\chi^2$ Estimation with Equiprobable Cells for $m=5$}
\centering
\begin{tabular}{|c|c|c|c|c|}
\hline
$n$ & 50 & 100 & 200 & 400 \\
\hline
$\hat{\mu}$ & 1.0684 & 1.0493 & 1.0330 & 1.0299 \\
$\hat{\sigma}$ & 1.9415 & 1.9539 & 1.9628 & 1.9678 \\
\text{RMSE}($\hat{\mu}$) & 0.0850 & 0.0641 & 0.0427 & 0.0375 \\
\text{RMSE}($\hat{\sigma}$) & 0.1782 & 0.1281 & 0.0948 & 0.0817 \\
\hline
\end{tabular}
\end{table}

\begin{table}[htbp]
\caption{Simulation Results of Minimum $\chi^2$ Estimation with Equiprobable Cells for $m=10$}
\centering
\begin{tabular}{|c|c|c|c|c|}
\hline
$n$ & 50 & 100 & 200 & 400 \\
\hline
$\hat{\mu}$ & 1.0488 & 1.0303 & 1.0222 & 1.0189 \\
$\hat{\sigma}$ & 1.9623 & 1.9732 & 1.9799 & 1.9834 \\
\text{RMSE}($\hat{\mu}$) & 0.0598 & 0.0380 & 0.0278 & 0.0244 \\
\text{RMSE}($\hat{\sigma}$) & 0.1564 & 0.1165 & 0.0797 & 0.0707 \\
\hline
\end{tabular}
\end{table}

\begin{table}[htbp]
\caption{Simulation Results of Minimum $\chi^2$ Estimation with Equiprobable Cells for $m=20$}
\centering
\begin{tabular}{|c|c|c|c|c|}
\hline
$n$ & 50 & 100 & 200 & 400 \\
\hline
$\hat{\mu}$ & 1.0355 & 1.0224 & 1.0151 & 1.0127 \\
$\hat{\sigma}$ & 1.9832 & 1.9793 & 1.9947 & 1.9940 \\
\text{RMSE}($\hat{\mu}$) & 0.0444 & 0.0286 & 0.0193 & 0.0160 \\
\text{RMSE}($\hat{\sigma}$) & 0.1575 & 0.1091 & 0.0714 & 0.0672 \\
\hline
\end{tabular}
\end{table}

\begin{table}[htbp]
\caption{Simulation Results of RP-based Minimum $\chi^2$ Estimation for $m=5$}
\centering
\begin{tabular}{|c|c|c|c|c|}
\hline
$n$ & 50 & 100 & 200 & 400 \\
\hline
$\hat{\mu}$ & 0.9915 & 0.9922 & 0.9972 & 0.9924 \\
$\hat{\sigma}$ & 2.0220 & 2.0184 & 2.0048 & 2.0062 \\
\text{RMSE}($\hat{\mu}$) & 0.1826 & 0.1242 & 0.0880 & 0.0787 \\
\text{RMSE}($\hat{\sigma}$) & 0.2055 & 0.1434 & 0.1026 & 0.0860 \\
\hline
\end{tabular}
\end{table}

\begin{table}[htbp]
\caption{Simulation Results of RP-based Minimum $\chi^2$ Estimation for $m=10$}
\centering
\begin{tabular}{|c|c|c|c|c|}
\hline
$n$ & 50 & 100 & 200 & 400 \\
\hline
$\hat{\mu}$ & 0.9871 & 0.9939 & 0.9979 & 0.9965 \\
$\hat{\sigma}$ & 2.0659 & 2.0348 & 2.0217 & 2.0151 \\
\text{RMSE}($\hat{\mu}$) & 0.1033 & 0.0716 & 0.0498 & 0.0451 \\
\text{RMSE}($\hat{\sigma}$) & 0.1842 & 0.1237 & 0.0864 & 0.0790 \\
\hline
\end{tabular}
\end{table}

\begin{table}[htbp]
\caption{Simulation Results of RP-based Minimum $\chi^2$ Estimation for $m=20$}
\centering
\begin{tabular}{|c|c|c|c|c|}
\hline
$n$ & 50 & 100 & 200 & 400 \\
\hline
$\hat{\mu}$ & 0.9957 & 0.9974 & 0.9980 & 0.9990 \\
$\hat{\sigma}$ & 2.1124 & 2.0639 & 2.0421 & 2.0346 \\
\text{RMSE}($\hat{\mu}$) & 0.0607 & 0.0449 & 0.0314 & 0.0269 \\
\text{RMSE}($\hat{\sigma}$) & 0.2021 & 0.1287 & 0.0906 & 0.0757 \\
\hline
\end{tabular}
\end{table}

\newpage

\subsection{Comparative Analysis}

As we can see in Tables \ref{table:2.1} and \ref{table:2.2}, when the sample size is small, the accuracies of the parametric estimation methods are low, since the RMSEs are nearly 0.3. We are not satisfied with these results, so our samples should be expanded, and the number of our cells should be increased. As the sample size increases, the accuracies become higher than those obtained with small samples. At \( n = 400 \), the RMSEs are small enough for us to accept. In addition, we can clearly see from the tables above that the moment estimation method and the RP-minimum \( \chi^2 \) estimation method are slightly better than the other two methods. In the next chapter, further applications of the four estimation methods will be implemented. We can observe the differences in estimation behavior more explicitly as further simulation studies are constructed.